jgedde

Performing a bit of thread necromancy here, and moving a previously point-to-point conversation out here, in case it benefits someone else who scratches their head about this:

I'm interested in using this design to make the ignitions on the ancient chunks of farm machinery that we use mostly for show purposes more reliable. I don't want to significantly alter the engines, so using the points in place, rather than cobbling in a hall sensor and magnet carrier, seems like a reasonable approach.

I'm wondering, however, whether the dwell time at cranking speed with points runs afoul of the "You're boring, I'm going to sleep" timeout you've built into the circuit. I'm not relishing the thought of what some of my battery-ignition but hand-crank-started engines would do if the timeout effectively looks like super-advanced timing at cranking speed!

I actually hadn't thought about that at all, and now that you've made me think about it, I'm wondering why this circuit works with the hall effect sensors at all...

I had previously (erroneously) assumed that, with the hall sensor, the design de-energized the coil when the hall sensor stopped sinking current (the transition from sensing the magnet to not sensing the magnet) and energized the coil again immediately afterward. Obviously this can't be the case. For one thing, it would imply that the coil was running with almost a full 360° of dwell, which seems unlikely to be healthy. For another, Jgedde has repeatedly mentioned the safety timeout for the system stopping with the hall sensor over the magnet, but has said nothing about needing to worry about stopping with the sensor away from the magnet.

Just to be sure that I was right about having been wrong, I hooked it up to my 'scope and yup, the coil output is de-energized until the hall sensor sees the magnet. It then comes up and stays up until either approximately 20 ms have passed or the magnet leaves, whichever comes first. Then it drops again until the next time it sees the magnet.
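For anyone who wants to play with the numbers, here is a minimal sketch of the behaviour seen on the 'scope. It is only a model of the observation above, not the actual circuit logic. The function name `coil_on_time` is made up for illustration, and the 20 ms figure is the approximate value read off the 'scope.

```python
# Approximate timeout seen on the 'scope (about 20 ms). Assumed value, not a spec.
TIMEOUT_S = 0.020


def coil_on_time(magnet_window_s, timeout_s=TIMEOUT_S):
    """Return how long the coil output stays energized for one magnet pass.

    magnet_window_s: time the hall sensor sees the magnet, in seconds.
    The output drops at whichever comes first: magnet leaving, or the timeout.
    """
    if magnet_window_s < 0:
        raise ValueError("magnet_window_s must be non-negative")
    return min(magnet_window_s, timeout_s)


# A short magnet pass sets the on-time by itself; a stalled engine hits the timeout.
print(coil_on_time(0.005))
print(coil_on_time(1.0))
```

In this model, any magnet pass longer than the timeout ends early, which is the same thing the points question above is worried about at cranking speed.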

So now I understand how the circuit works, but I don't understand _why_ it works. With the hall sensor, the dwell duration is only the period between when the hall sensor sees the magnet, and when the magnet leaves its sensing radius.

At any kind of realistic engine speed, for anywhere that I can think of that's easy to place magnets (e.g., flywheel, crank), that time gets down into the sub-millisecond range pretty quickly. With wild gesticulation instead of actual number-crunching: at 60 RPM, 1 degree of revolution takes about 3 ms. It's been a long time since I worried about performance engines, but dim memory says that's just barely enough to build field in a coil on a particularly cheerful and optimistic day.
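The hand-waving above is easy enough to check. This is a rough sketch that assumes the magnet carrier turns at crankshaft speed. The function name `ms_per_degree` and the example speeds other than 60 RPM are picked for illustration only.

```python
def ms_per_degree(rpm):
    """Milliseconds taken to rotate through one degree at the given RPM."""
    if rpm <= 0:
        raise ValueError("rpm must be positive")
    seconds_per_rev = 60.0 / rpm
    return seconds_per_rev / 360.0 * 1000.0


# 60 RPM is the figure from the post; the other speeds are arbitrary examples.
for rpm in (60, 600, 2000):
    print(f"{rpm:>5} RPM: {ms_per_degree(rpm):.3f} ms per degree")
```

At 60 RPM this comes out a little under 3 ms per degree, and by a few hundred RPM a single degree is already well under a millisecond.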

Assuming people are shooting for running speeds in the high hundreds to low-couple-thousand RPM, does this mean that you're finding some way to coat 50 or so degrees of some spinning component with magnets, to develop sufficient hall sensor on-time? Or is there some other magic at work here?

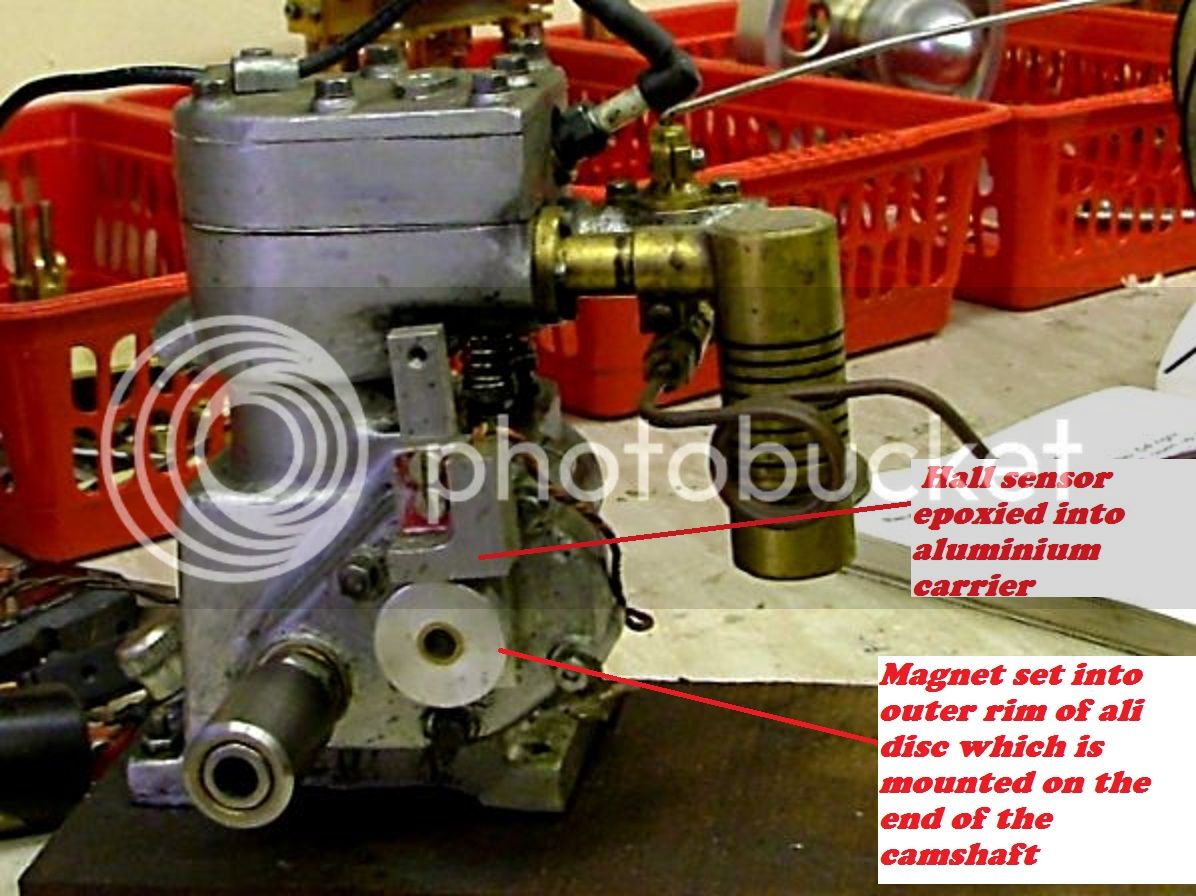

The trick is that the magnet has to be wide enough to give the desired dwell time... On model engines, this isn't much of an issue since the size ratio between the magnet and whatever is driving it is likely to be large. On a full-size engine, you need a scaled-up magnet, or you can just use the existing points to drive the circuit...

John
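To put rough numbers on the magnet-width point, here is a back-of-the-envelope sketch. It assumes the magnet carrier turns at crankshaft speed and that the hall sensor switches exactly at the magnet's edges, so the real sensing radius is ignored. The function names, the 3 ms dwell target, the 1000 RPM speed and the 150 mm radius are all hypothetical values chosen for illustration, not figures from the design.

```python
import math


def dwell_angle_deg(dwell_s, rpm):
    """Degrees the magnet carrier rotates through during dwell_s seconds."""
    return dwell_s * (rpm / 60.0) * 360.0


def magnet_arc_mm(dwell_s, rpm, radius_mm):
    """Arc length the magnet must cover at radius_mm to give dwell_s of on-time."""
    return math.radians(dwell_angle_deg(dwell_s, rpm)) * radius_mm


# Hypothetical example: 3 ms of dwell at 1000 RPM, magnet mounted 150 mm from the axis.
angle = dwell_angle_deg(0.003, 1000)
arc = magnet_arc_mm(0.003, 1000, 150.0)
print(f"dwell angle: {angle:.1f} degrees, magnet arc: {arc:.1f} mm")
```

The required arc grows with both engine speed and mounting radius, which is why a small model-engine disc gets away with one small magnet while a full-size flywheel would need something much wider.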